Jul 1, 2025

Large Action Models (LAMs) - Everything You Need to Know

Discover what Large Action Models (LAMs) are, how they differ from Large Language Models, and why they’re the next AI revolution. Explore real-world examples in web automation, AI agents, robotics, and more.



What is a Large Action Model (LAM)?

You’ve probably heard of Large Language Models (LLMs) like GPT or Claude - the AI that can write essays, answer complex questions, and even spin up poetry.

But what if AI could go beyond just talking? What if it could actually do things? That’s where the Large Action Model (LAM) comes in.

A Large Action Model is an AI system trained not just to understand language, but to take real-world actions. It can click, scroll, book tickets, manage apps, run workflows, and even interact with robotics. Where an LLM gives you an answer, a LAM carries out the task.

Imagine saying: “Book me the cheapest flight to Lisbon next week, add it to my Google Calendar, and notify my boss.”

An LLM might suggest airlines or draft an email.

A LAM can actually book the flight, update your calendar, and send the email automatically.

This is why LAMs are such a breakthrough: they extend the usefulness of language models by pairing understanding with action-oriented reasoning. They don’t just answer questions, they act on them.

Real-life uses are already here: LAMs can handle travel bookings, manage refunds, run reports, or even design a post in Canva and publish it to Instagram, all without you lifting a finger.

With that in mind, let’s break down the key differences between LAMs and LLMs.

What’s the difference between a Large Action Model (LAM) and a Large Language Model (LLM)?

A Large Language Model (LLM) is a master of text. It writes essays, holds conversations, summarises documents, and translates languages, all through advanced language processing. But at the end of the day, LLMs are passive responders: they provide words, not actions.

A Large Action Model (LAM), on the other hand, goes beyond language. It acts. LAMs elevate comprehension into execution, turning instructions into tangible outcomes. They combine the linguistic intelligence of LLMs with the ability to interact across applications, APIs, software tools, and even robotics.

LAMs are goal-driven, context-aware, and designed to carry tasks through to completion, not just provide an answer. If LLMs are the “smart writers”, LAMs are the “smart doers.”

In essence:

LLMs: Experts at generating language-powered outputs. Great for conversation, content, and explanation, but their capabilities stop there.

LAMs: Built to turn intentions into actions. From scheduling interviews to entering data across platforms, LAMs can navigate and manipulate environments autonomously.

To read more about the difference between Large Action Models and Large Language Models, take a look at our comprehensive guide.

Large Action Model Examples

To understand how LAMs work in practice, let’s walk through two of the most compelling types of example already in use today:

1. AI Agents for Web Automation

One of the most practical applications of Large Action Models (LAMs) today is web automation.

OpenAI’s “Operator” showcases this potential by simulating human-like interactions - clicking, typing, and scrolling to automate workflows directly in the browser.

Google’s “Project Mariner” goes further, running as a Chrome extension that reads web pages, understands user intent, and autonomously navigates, fills forms, and completes tasks online.

Nanobrowser, an open-source option, enables multi-agent workflows that run locally with your own LLM key, though it’s currently geared toward more technical users.

Action Model: we always save the best for last! We're a community-owned model that lets you automate anything across your computer with no code or APIs.

With our browser extension, users don’t just enjoy no-code automation, they actively train a Large Action Model, earn rewards, and gain real ownership along the way. Unlike big tech, which monopolises the value created by AI, we’re building a community-driven model where contributors are true stakeholders, not just end users.

View real use-case examples for Action Model here.

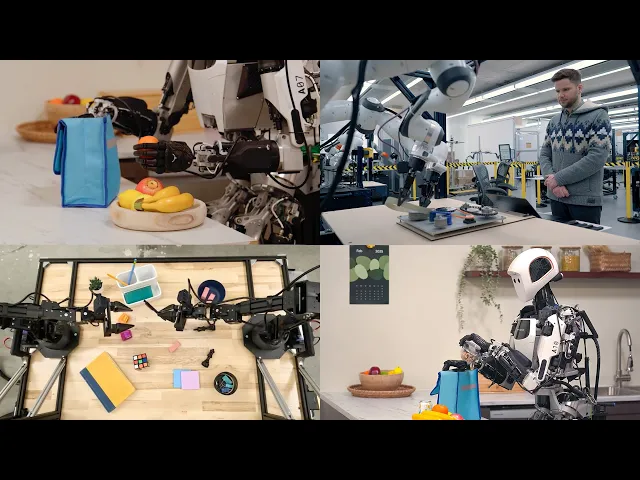

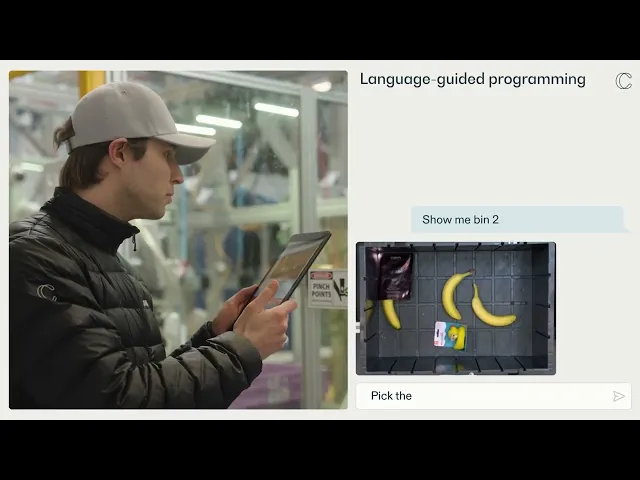

2. Robotics and IoT

Robots that can reason and act in the real world are no longer science fiction. Startups and research labs are already prototyping agents that can handle complex, multi-step tasks in homes, factories, and beyond.

Here are some of the most exciting examples where robotics and LAM-like systems are coming together:

Figure AI’s Helix – A Vision–Language–Action model that gives humanoid robots dexterity, collaboration skills, and the ability to manipulate unfamiliar household objects through natural-language prompts.

Google DeepMind’s Gemini Robotics – Embodied reasoning models that combine perception, reflexive responses, and deliberative planning, tested on humanoids like Apptronik’s Apollo.

Covariant’s RFM-1 – A robotics foundation model trained on visuals, text, sensors, and millions of robot actions, enabling warehouse robots to reason and manipulate objects with greater flexibility.

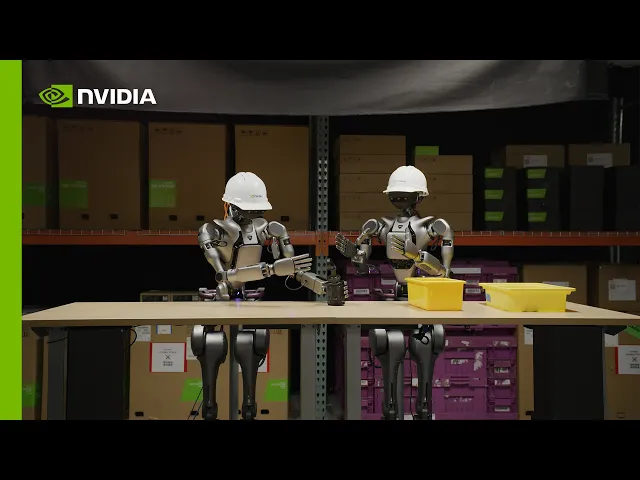

NVIDIA’s Isaac GR00T N1 – An open-source robotics model that fuses reflexive actions with higher-level planning, designed for humanoids and general-purpose autonomous tasks.

While many of these companies frame their advances under the banner of 'Agentic AI', it’s worth noting that the underlying mechanics often align with, or evolve toward, Large Action Models. Understanding where the two concepts overlap helps clarify just how quickly robotics is moving toward true autonomous reasoning and action.

What is the difference between Large Action Models and Agentic AI?

It’s common for people to confuse Large Action Models (LAMs) with Agentic AI, because they’re closely related and often overlap.

Agentic AI is the broader concept. It refers to any AI system designed to act with autonomy. These systems can set goals, make decisions, and complete tasks with minimal human oversight. They perceive their environment, weigh options, and adapt through feedback loops, enabling complex, multi-step problem-solving in dynamic settings. Sound familiar?

Large Action Models (LAMs), on the other hand, are a specific implementation within this space. Think of them as the engines that can make agentic AI possible. LAMs are models trained to control computers like humans - clicking, typing, scrolling, and navigating software interfaces.

In other words: Agentic AI is the destination, and LAMs are one of the vehicles that get us there.
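To make the relationship concrete, here is a minimal sketch of an agentic loop in Python. It is an illustration under stated assumptions, not how any particular product works: `run_agent`, the fake `screen`, and `rule_based_chooser` are all hypothetical names. The `choose_action` slot is where an action model would sit; here a simple rule stands in for it.

```python
from typing import Callable

Observation = dict
Action = str


def run_agent(
    goal: str,
    perceive: Callable[[], Observation],
    choose_action: Callable[[str, Observation], Action],  # the action-model role
    act: Callable[[Action], None],
    is_done: Callable[[Observation], bool],
    max_steps: int = 10,
) -> bool:
    """Agentic loop: perceive, check the goal, decide, act, repeat."""
    for _ in range(max_steps):
        obs = perceive()
        if is_done(obs):
            return True
        act(choose_action(goal, obs))
    # Out of steps: report whether the last action reached the goal.
    return is_done(perceive())


# Hypothetical stand-ins: a fake "screen" and a rule-based chooser.
screen = {"page": "home", "form_sent": False}


def fake_act(action: Action) -> None:
    if action == "open_form":
        screen["page"] = "form"
    elif action == "submit" and screen["page"] == "form":
        screen["form_sent"] = True


def rule_based_chooser(goal: str, obs: Observation) -> Action:
    return "submit" if obs["page"] == "form" else "open_form"


succeeded = run_agent(
    goal="send the contact form",
    perceive=lambda: dict(screen),
    choose_action=rule_based_chooser,
    act=fake_act,
    is_done=lambda obs: obs["form_sent"],
)
```

The loop itself is the "agentic" part; swapping `rule_based_chooser` for a trained model that maps a goal and a screen state to the next click or keystroke is, roughly, where a LAM would plug in.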

Now that we’ve drawn the line between the vision and the implementation, let’s explore how to train a Large Action Model.

How to Train a Large Action Model

Training a LAM is vastly more complex than training a static model. These models need to:

Understand goals and intentions

Map them to available actions

Adapt to changing environments

Learn from feedback and failure

The quality and structure of AI training data for LAMs determine their effectiveness.

Learn how we’re using our Chrome extension to help train our own Large Action Model.

Key Sources of Training Data for LAMs:

Enterprise & RPA Logs

Many businesses already use Robotic Process Automation (RPA) tools to automate repetitive tasks. These tools, along with enterprise systems like SAP or Oracle, generate event logs: structured records of every step in a process (e.g., “log in → open report → export data”).

Why it matters: These logs act like blueprints of real business workflows. They show the order of actions, timing, and outcomes, which help LAMs understand how professional tasks are structured.

How it trains LAMs: By feeding these logs into training, a LAM can learn the skeleton of business processes before being fine-tuned on more detailed, user-level data.
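As a rough illustration of what "feeding these logs into training" might involve, the sketch below turns a flat event log into one ordered action sequence per process run. The `Event` fields (`case_id`, `timestamp`, `action`) and the sample rows are assumptions made for this example; real RPA and enterprise logs vary by vendor.

```python
from collections import defaultdict
from typing import NamedTuple


class Event(NamedTuple):
    case_id: str    # hypothetical: identifies one run of a business process
    timestamp: int  # hypothetical: seconds since the run started
    action: str     # e.g. "log in", "open report", "export data"


def traces_by_case(events: list[Event]) -> dict[str, list[str]]:
    """Group events by case and order each group by time."""
    grouped: dict[str, list[Event]] = defaultdict(list)
    for event in events:
        grouped[event.case_id].append(event)
    return {
        case_id: [e.action for e in sorted(case_events, key=lambda e: e.timestamp)]
        for case_id, case_events in grouped.items()
    }


# Made-up log rows, deliberately out of order.
events = [
    Event("run-1", 12, "open report"),
    Event("run-1", 0, "log in"),
    Event("run-2", 0, "log in"),
    Event("run-1", 30, "export data"),
]
traces = traces_by_case(events)
```

Each resulting trace, such as `traces["run-1"]`, is the "skeleton" of one workflow: an ordered list of actions a model could learn to predict step by step.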

Synthetic Environments

Since recording real user journeys is expensive and limited, researchers often create synthetic arenas where LAMs can practice. These environments provide structured, repeatable tasks for training and benchmarking.

However, in practice, their usefulness is limited compared to real-world interaction data. Synthetic setups tend to oversimplify reality, missing the complexity, unpredictability, and edge cases that Large Action Models must eventually handle. They’re essentially “toy worlds” that test baseline skills but don’t expose an AI to the messy, inconsistent, and context-rich environments of actual software used by people and businesses.

Examples:

MiniWoB++: hundreds of simple browser tasks like filling forms or clicking menus.

WebArena: realistic, fully functional websites where agents must complete long, multi-step goals.

Why it matters: In these environments, an agent can fail fast, reset instantly, and repeat thousands of times per minute, something impossible with real human data collection.

How it trains LAMs: They act like flight simulators for AI, letting models practice core navigation and error recovery until the skills generalise to real-world apps.
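The "fail fast, reset, repeat" idea can be sketched with a toy environment. This is not the MiniWoB++ or WebArena API; `ToyFormEnv`, its three actions, and both policies are hypothetical, chosen only to show the reset and step cycle.

```python
import random


class ToyFormEnv:
    """Hypothetical toy task: fill the name field, then click submit."""

    ACTIONS = ("type_name", "click_submit", "click_cancel")

    def reset(self) -> dict:
        self.name_filled = False
        return {"name_filled": self.name_filled}

    def step(self, action: str) -> tuple[dict, float, bool]:
        """Apply one action and return (observation, reward, done)."""
        if action == "type_name":
            self.name_filled = True
            return {"name_filled": True}, 0.0, False
        if action == "click_submit" and self.name_filled:
            return {"name_filled": True}, 1.0, True  # task solved
        # Any other move ends the episode with no reward: fail fast.
        return {"name_filled": self.name_filled}, 0.0, True


def run_episode(env, policy, max_steps: int = 5) -> float:
    obs = env.reset()
    for _ in range(max_steps):
        obs, reward, done = env.step(policy(obs))
        if done:
            return reward
    return 0.0


def random_policy(obs: dict) -> str:
    return random.choice(ToyFormEnv.ACTIONS)


def scripted_policy(obs: dict) -> str:
    return "click_submit" if obs["name_filled"] else "type_name"


env = ToyFormEnv()
random_successes = sum(run_episode(env, random_policy) for _ in range(1000))
scripted_reward = run_episode(env, scripted_policy)
```

A thousand episodes finish almost instantly because every failure resets the same small world. That cheap repetition is what synthetic environments offer, and the simplicity of the world is also their limitation.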

User Interaction Logs (Path Data)

This is the gold standard for training LAMs, and it’s exactly what we’re building at Action Model.

Path data is created when a real person’s journey through software is recorded, capturing:

Every click, keystroke, scroll, or drag

The screen state at each step

The goal or intent driving the actions

The sequence and timing of events
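One way to picture a path-data record is as a small data structure holding the fields listed above. The sketch below is an assumption for illustration only, not Action Model's actual recording format; `PathStep`, `PathRecord`, and every field name are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class PathStep:
    event: str         # "click", "keystroke", "scroll", or "drag"
    target: str        # hypothetical: selector or label of the UI element
    screen_state: str  # hypothetical: reference to a screenshot or DOM snapshot
    t_ms: int          # milliseconds since the recording started


@dataclass
class PathRecord:
    intent: str  # the goal driving the actions
    steps: list[PathStep] = field(default_factory=list)

    def add(self, step: PathStep) -> None:
        # Reject out-of-order steps so sequence and timing stay intact.
        if self.steps and step.t_ms < self.steps[-1].t_ms:
            raise ValueError("steps must be recorded in time order")
        self.steps.append(step)


record = PathRecord(intent="export the monthly report")
record.add(PathStep("click", "#reports-menu", "snap-001", 0))
record.add(PathStep("click", "#export-button", "snap-002", 1800))
serialised = json.dumps(asdict(record))
```

Keeping the intent alongside the ordered steps is the point of the design: the same clicks mean different things under different goals.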

Why it matters: Unlike synthetic environments or enterprise logs, path data shows how humans actually get work done, with all the mistakes, corrections, and decision points that real life involves.

How it trains LAMs: By feeding these journeys into the model, LAMs learn to act with context, not just repeat patterns. But here’s the challenge: while text data is everywhere, action data is scarce and expensive. It has to be recorded intentionally, with context preserved.

At Action Model, we’ve solved this by building a Chrome extension that captures valuable interaction data while you browse. Contributors are rewarded in $LAM tokens, turning everyday digital activity into fuel for a smarter, fairer, community-owned Large Action Model.

Find out more about how we train our Action Model here.

Our Action Model & Vision

We’re building the world’s first Large Action Model community and we want to give you, the people, the power back.

Right now, AI models are using our data and profiting significantly from it. Our model rewards those who train it: not big tech or billion-pound corporations, but users like you.

By downloading the Action Model browser extension, you can help train our AI while earning $LAM tokens. This gives you tangible ownership in the future of AI, whilst earning in the background.

Get rewarded for what you use and share

Turn tokens into real influence over how the model runs

Launch your own workflows in the marketplace and earn every time they’re used

Help us develop the next level of AI web agents and get paid in the process!

Sign up to Action Model, create your own AI agents and start earning today.

Conclusion: The Next AI Revolution

So, hopefully you now understand how Large Action Models are creating a seismic shift in how we use AI. No longer limited to text or static outputs, these systems act in the real world, and that changes everything.

The tools are being built. The data is being collected. The future is being automated, and it’s moving fast. Don’t miss your chance to be part of the Large Action Model revolution.